ImFusion C++ SDK 4.4.0

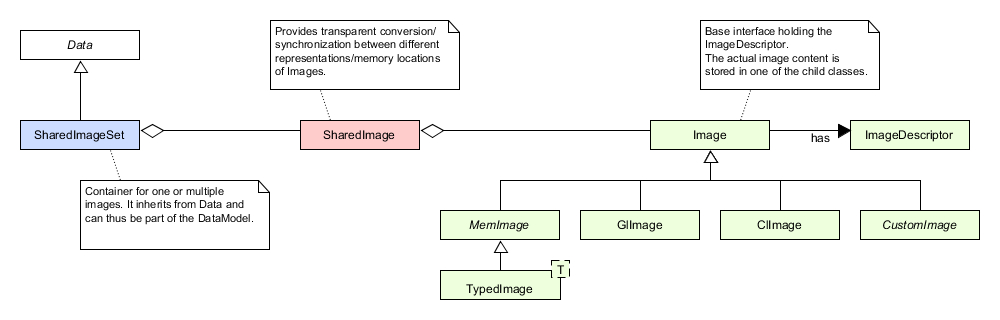

All kinds of image data available to Algorithms, including 3D volumes, are provided as a SharedImageSet. A SharedImageSet can contain multiple images; in most cases, however, it consists of a single one (i.e. a list with a single item). Because this case is so common, all access methods can also be used without an explicit index, in which case they implicitly use the focus frame of the Selection object assigned to each SharedImageSet.

SharedImageSet models shared ownership of the images it contains, which means they are stored in a std::shared_ptr internally. This enables you to reference an image inside a SharedImageSet from another SharedImageSet, for instance to run an Algorithm on a subset of your image data. For such use cases, use SharedImageSet::getShared() or SharedImageSet::images() to access them. However, be aware that if multiple algorithms run on the same image data, their side effects affect each other.
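The effect of shared ownership can be illustrated without the SDK. The following is a minimal sketch using plain std::shared_ptr; `ToyImage`, `ToyImageSet` and `makeSubset` are hypothetical stand-ins invented for this illustration and are not ImFusion types.

```cpp
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical stand-ins for illustration only; not ImFusion types.
struct ToyImage {
    std::vector<float> pixels;
};

struct ToyImageSet {
    std::vector<std::shared_ptr<ToyImage>> images;

    void add(std::shared_ptr<ToyImage> image) { images.push_back(std::move(image)); }
};

// Builds a subset that references (does not copy) one image of the source set.
inline ToyImageSet makeSubset(const ToyImageSet& source, std::size_t index) {
    ToyImageSet subset;
    subset.add(source.images.at(index));  // copies the shared_ptr, not the pixels
    return subset;
}
```

Because both sets hold the same std::shared_ptr, a write through the subset is visible in the original set. This is the kind of side effect the paragraph above warns about.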

The Selection allows you to restrict the set of images that an algorithm uses. Not all algorithms are required to support SharedImageSets with multiple images and complex selections. Instead, algorithms are allowed to treat a set of multiple images as a single image by only using the focus frame of the Selection. This way, algorithms designed for single images can still be used on sets of multiple images by changing the selection and calling the single-image algorithm multiple times.

To simplify the extraction of the selected images in a set, you can call SharedImageSet::selectedImages(). Selections are not enforced by the framework, i.e. algorithms can ignore the selection altogether and retrieve any image in the set. Ignoring the selection (apart from only using the focus) leads to an inconsistent user experience and should be avoided.
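The pattern of reusing a single-image algorithm on a multi-image set by moving the focus can be sketched as follows. This is a toy model under stated assumptions: `ToySelection`, `ToySet`, `doubleFocusImage` and `runOnSelection` are hypothetical names, and the real Selection class may expose a different interface.

```cpp
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical stand-ins; not the ImFusion Selection API.
struct ToySelection {
    std::vector<bool> selected;  // one flag per image in the set
    std::size_t focus = 0;       // index of the focus frame
};

struct ToySet {
    std::vector<double> images;  // each "image" is reduced to a single value
    ToySelection selection;
};

// A single-image "algorithm": it only ever touches the focus frame.
inline void doubleFocusImage(ToySet& set) {
    set.images[set.selection.focus] *= 2.0;
}

// Applies a single-image algorithm to every selected image by moving the focus.
inline void runOnSelection(ToySet& set, const std::function<void(ToySet&)>& algorithm) {
    const std::size_t originalFocus = set.selection.focus;
    for (std::size_t i = 0; i < set.images.size(); ++i) {
        if (!set.selection.selected[i])
            continue;  // respect the selection: skip unselected images
        set.selection.focus = i;
        algorithm(set);
    }
    set.selection.focus = originalFocus;  // restore the caller's focus
}
```

Restoring the original focus at the end keeps the set in the state the caller left it in.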

The default constructor will create an empty SharedImageSet. You can add any number of images by using one of the overloads of SharedImageSet::add() taking an Image or a SharedImage. For convenience, constructor overloads are available to directly initialize the SharedImageSet with an image.

There are different ways to access the image data of a SharedImageSet:

For convenience the SharedImageSet::mem() and SharedImageSet::gl() methods enable you to directly retrieve the MemImage or GlImage, respectively:

The SharedImageSet::CloneOptions enable you to define which aspects of a SharedImageSet you want to copy and which not. For instance, in situations where you want to copy the structure and all metadata (matrices, DataComponents, ...) of the input SharedImageSet, but use different pixel data, the following pattern is useful:

The items of a SharedImageSet are of type SharedImage. A SharedImage wraps the actual image data, which can reside in different memory locations, e.g. the main memory (RAM) or the local memory of a GPU (VRAM). Similar to SharedImageSet, SharedImage also uses std::shared_ptr to model ownership of the image data it contains. In addition to wrapping the Image, SharedImage allows you to attach additional information to an image, namely the image modality, a transformation matrix describing the pose of the image in the world coordinate system, as well as an optional Mask and Deformation.

SharedImage can hold multiple different image representations at the same time, for instance standard CPU RAM (MemImage / TypedImage) and OpenGL textures (GlImage). It will automatically take care of synchronization between them whenever you request a representation that is not available yet or marked dirty.

Since synchronization between image representations may invalidate their pointers, you should never keep persistent pointers (e.g. as member variables) to them.
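The idea of lazy synchronization driven by dirty flags can be sketched with a toy class. This is not how SharedImage is implemented; `ToySharedImage` and all of its member names are hypothetical, and the "GPU" copy is simulated by a second std::vector.

```cpp
#include <utility>
#include <vector>

// Hypothetical model of one image with two representations; not the SharedImage API.
class ToySharedImage {
public:
    explicit ToySharedImage(std::vector<float> cpuData) : m_cpu(std::move(cpuData)) {}

    // Returns the CPU copy, refreshing it first if it is marked dirty.
    std::vector<float>& cpu() {
        if (m_cpuDirty) { m_cpu = m_gpu; m_cpuDirty = false; ++m_syncCount; }
        return m_cpu;
    }

    // Returns the simulated GPU copy, creating or refreshing it on demand.
    std::vector<float>& gpu() {
        if (m_gpuDirty) { m_gpu = m_cpu; m_gpuDirty = false; ++m_syncCount; }
        return m_gpu;
    }

    // Call after writing to the CPU copy: the GPU copy is now outdated.
    void markCpuModified() { m_gpuDirty = true; }
    // Call after writing to the GPU copy: the CPU copy is now outdated.
    void markGpuModified() { m_cpuDirty = true; }

    int syncCount() const { return m_syncCount; }

private:
    std::vector<float> m_cpu;
    std::vector<float> m_gpu;
    bool m_cpuDirty = false;
    bool m_gpuDirty = true;  // the GPU copy does not exist yet
    int m_syncCount = 0;
};
```

In this sketch a synchronization assigns one vector to the other, which may reallocate its storage. A raw pointer obtained before the synchronization could then dangle, which mirrors the reason given above for not keeping persistent pointers to a representation.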

A SharedImage must never be empty. Therefore, you must construct it from an existing image:

Use the available member functions to retrieve any representation:

All image representations of a SharedImage derive from the Image class. This class does not hold any actual image data but only contains an ImageDescriptor and offers a couple of convenience functions to the user. Concrete image data (i.e. pixel data) is stored in the child classes.

The ImageDescriptor class serves as the basic storage entity for the essential properties of an image. It does not store any pixel data, only metadata such as pixel type, dimensions, etc. Furthermore, it provides a set of convenience functions such as conversion between image coordinates and indices.
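A conversion between image coordinates and linear pixel indices could look like the following sketch. `ToyDescriptor` and its members are hypothetical and do not reproduce the ImageDescriptor interface; the sketch assumes that x varies fastest, followed by y and then z.

```cpp
#include <array>
#include <cstddef>

// Hypothetical descriptor; field and function names are illustrative only.
struct ToyDescriptor {
    std::size_t width = 1;
    std::size_t height = 1;
    std::size_t slices = 1;

    std::size_t pixelCount() const { return width * height * slices; }

    // (x, y, z) -> linear pixel index, with x varying fastest.
    std::size_t indexOf(std::size_t x, std::size_t y, std::size_t z) const {
        return x + width * (y + height * z);
    }

    // Linear pixel index -> (x, y, z); the inverse of indexOf.
    std::array<std::size_t, 3> coordOf(std::size_t index) const {
        return {index % width, (index / width) % height, index / (width * height)};
    }
};
```

For a 4x3x2 descriptor, the coordinate (1, 2, 1) maps to index 1 + 4 * (2 + 3 * 1) = 21, and `coordOf(21)` maps back to (1, 2, 1).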

Images where the pixel data is stored in main memory (RAM) are modeled by the MemImage and TypedImage classes. A TypedImage<T> wraps a buffer holding the image data, where the template type determines the pointer type of the buffer. It derives from the abstract MemImage interface, which hides the template type and only provides access to a generic void pointer. Since SharedImage itself does not have a template type, it will return MemImages.

The pixel data is stored in a fashion where the x axis is the fastest moving component. In other words: the image data is saved in the order width -> height -> slices. Channels are saved interleaved, e.g. the first 4 pixels in a 3-channel buffer would look like rgbrgbrgbrgb.
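The layout described above translates into a simple offset formula. The sketch below is independent of the SDK; `bufferOffset` and `makeRgbPattern` are hypothetical helper names chosen for this illustration.

```cpp
#include <cstddef>
#include <string>

// Offset of channel c of pixel (x, y, z) in an interleaved buffer where x varies fastest.
inline std::size_t bufferOffset(std::size_t x, std::size_t y, std::size_t z, std::size_t c,
                                std::size_t width, std::size_t height, std::size_t channels) {
    return (x + width * (y + height * z)) * channels + c;
}

// Fills a 3-channel 2D buffer with the channel names so the layout becomes visible.
inline std::string makeRgbPattern(std::size_t width, std::size_t height) {
    const char names[3] = {'r', 'g', 'b'};
    std::string buffer(width * height * 3, '?');
    for (std::size_t y = 0; y < height; ++y)
        for (std::size_t x = 0; x < width; ++x)
            for (std::size_t c = 0; c < 3; ++c)
                buffer[bufferOffset(x, y, 0, c, width, height, 3)] = names[c];
    return buffer;
}
```

Calling `makeRgbPattern(4, 1)` yields the string `rgbrgbrgbrgb`, matching the four-pixel example in the text.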

There are several methods to create a MemImage instance (in the example below, a 3-channel 128x128 2D image of type float):

The following code illustrates access to the image data:

To access the image data from a MemImage, one can either cast the MemImage to the corresponding TypedImage or cast the void pointer to the correct pointer type.
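The two access routes, casting the interface to the typed image or casting the void pointer, can be modeled in miniature. `ToyMemImage`, `ToyTypedImage` and both fill functions are hypothetical and only mimic the relationship described above; they are not the SDK classes.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical miniature of an untyped interface with a typed implementation.
class ToyMemImage {
public:
    virtual ~ToyMemImage() = default;
    virtual void* data() = 0;              // type-erased access
    virtual std::size_t size() const = 0;  // number of values in the buffer
};

template <typename T>
class ToyTypedImage : public ToyMemImage {
public:
    explicit ToyTypedImage(std::size_t count) : m_buffer(count) {}
    void* data() override { return m_buffer.data(); }
    std::size_t size() const override { return m_buffer.size(); }
    T* pointer() { return m_buffer.data(); }  // typed access
private:
    std::vector<T> m_buffer;
};

// Option 1: cast the interface to the typed image; fails safely on a type mismatch.
inline bool fillViaTypedImage(ToyMemImage& image, float value) {
    auto* typed = dynamic_cast<ToyTypedImage<float>*>(&image);
    if (!typed)
        return false;
    for (std::size_t i = 0; i < typed->size(); ++i)
        typed->pointer()[i] = value;
    return true;
}

// Option 2: cast the void pointer; the caller must already know the data is float.
inline void fillViaVoidPointer(ToyMemImage& image, float value) {
    float* p = static_cast<float*>(image.data());
    for (std::size_t i = 0; i < image.size(); ++i)
        p[i] = value;
}
```

In this sketch the first option detects a wrong pixel type at run time, while the second offers no such check and relies entirely on the caller.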

A GlImage wraps an OpenGL texture and handles all calls to the OpenGL API. The texture format is automatically determined based on the type and the number of channels. If the GlImage is created from a MemImage, the data of the MemImage will be automatically uploaded to the GPU memory. A GlImage needs to be bound to a texture unit before it can be used with any OpenGL operations.

To get a better understanding of how to use the different image containers, the following example will create a very simple Algorithm that takes the square root of each pixel times a parameter and writes it back to the image.

The algorithm will work on any 2D image or 3D volume.

Additionally the algorithm supports a multiplier as Parameter.

In the compute method we first have to retrieve the image data we want to modify. For now, we only want to create a CPU based implementation, therefore we need a MemImage:

The input data m_image is a SharedImageSet which could potentially consist of multiple images. Our algorithm, however, will ignore that and only work on the focus image. The previous code is therefore just a short version of:

The mem method will automatically synchronize the image data if it is currently not available.

Using the MemImage, we can iterate over each pixel, retrieve its value, modify it and write it back to the image.
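Stripped of all SDK types, the per-pixel loop can be sketched on a plain buffer. `ToyVolume` and `sqrtTimesMultiplier` are hypothetical names, and the sketch assumes the operation is sqrt(value) * multiplier on a single-channel float image; it is not the SDK example itself.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical single-channel volume; not an ImFusion type.
struct ToyVolume {
    std::size_t width;
    std::size_t height;
    std::size_t slices;
    std::vector<float> pixels;  // x varies fastest, then y, then z

    float& at(std::size_t x, std::size_t y, std::size_t z) {
        return pixels[x + width * (y + height * z)];
    }
};

// Replaces every value v by sqrt(v) * multiplier, in place.
// Negative inputs produce NaN, as std::sqrt does for negative arguments.
inline void sqrtTimesMultiplier(ToyVolume& volume, double multiplier) {
    for (std::size_t z = 0; z < volume.slices; ++z)
        for (std::size_t y = 0; y < volume.height; ++y)
            for (std::size_t x = 0; x < volume.width; ++x) {
                float& v = volume.at(x, y, z);  // read the value ...
                v = static_cast<float>(std::sqrt(static_cast<double>(v)) * multiplier);  // ... and write it back
            }
}
```

Iterating with x in the innermost loop follows the storage order, so memory is traversed linearly.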

The valueDouble method returns the pixel value as an unnormalized double, independent of the actual image type. For an image of type unsigned char, valueDouble(...) therefore returns values between 0.0 and 255.0; for an image of type unsigned short, it returns values between 0.0 and 65535.0. The same holds for setValueDouble, which expects an unnormalized value of type double. The given value is cast directly to the image's pixel type, i.e. it is not clamped to the valid range and may overflow if it is too large or too small. Note that neither method checks whether the passed indices are valid; the caller must perform this check before calling them.
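Since neither range nor indices are checked for you, a caller might guard both explicitly. The helpers below are a sketch with hypothetical names (`clampToPixelType`, `isInside`); they are not part of the SDK. The clamp assumes a finite input and an integer pixel type of at most 32 bits.

```cpp
#include <algorithm>
#include <limits>

// Clamps an unnormalized double to the representable range of pixel type T
// before converting it. Assumes a finite value; fractions are truncated.
template <typename T>
T clampToPixelType(double value) {
    const double lo = static_cast<double>(std::numeric_limits<T>::lowest());
    const double hi = static_cast<double>(std::numeric_limits<T>::max());
    return static_cast<T>(std::min(std::max(value, lo), hi));
}

// Returns true if (x, y, z) addresses a pixel inside a width x height x slices image.
inline bool isInside(int x, int y, int z, int width, int height, int slices) {
    return x >= 0 && x < width && y >= 0 && y < height && z >= 0 && z < slices;
}
```

For example, `clampToPixelType<unsigned char>(300.0)` yields 255 instead of an out-of-range conversion.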

Last but not least we need to tell the SharedImage that we just modified the MemImage data: